A geeky deep-dive: database deduplication to identify victims of human rights violations

In our work, we merge many databases to figure out how many people have been killed in violent conflict. Merging is a lot harder than you might think.

In our work, we merge many databases to figure out how many people have been killed in violent conflict. Merging is a lot harder than you might think.

Many of the database records refer to the same people–the records are duplicated. We want to identify and link all the records that refer to the same victims so that each victim is counted only once, and so that we can use the structure of overlapping records to do multiple systems estimation.

Merging records that refer to the same person is called entity resolution, database deduplication, or record linkage. For definitive overviews of the field, see Scheuren, Herzog, and Winkler, Data Quality and Record Linkage Techniques (2007), and Peter Christen, Data Matching: Concepts and Techniques for Record Linkage, Entity Resolution, and Duplicate Detection (2012).

Database deduplication has been an active research area in statistics and computer science for decades. If records lack a unique identifier, like a social security number, finding the same person among many records may be hard because records that refer to the same person may have slightly different information. Sometimes names are spelled a little bit differently, sometimes birth or death dates are slightly different, sometimes different people have the same names, and so forth. In our work studying lists of people killed in violent conflicts, the records usually have imprecise information.

Database deduplication is also hard because there can be a lot of records involved. In our work on homicides in Colombia 2003-2011, we processed over 460,000 records. In our current work on Syria, we’re managing about 360,000 records. It’s a lot of data.

We’ve written about our database deduplication framework before. In 2013, Jule Krüger wrote this post, Amelia Hoover Green wrote this post, and Anita Gohdes wrote this post.

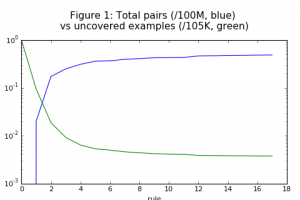

Database deduplication is so hard that in my five-part post, I’m only going to talk about the first step in record linkage, called blocking or indexing. In blocking, the goal is to reduce the number of records we consider as possible pairs so that we can calculate the likely matches without running for weeks. I show how we reduce the comparisons among the Syria datasets from 65 billion possible pairs of records to about 43 million pairs, a thousand-fold reduction. It’s a very geeky post in which I dig into the technical details, link to some of the key academic and applied work in this area, and I show actual code (in python and pandas) that does the work. It’s written in the jupyter notebook, which is a computing environment I’m in love with right now. Let’s dig in!

part 1 | part 2 | part 3 | part 4 | part 5