How we make sure that nobody is counted twice: A peek into HRDAG's record de-duplication

HRDAG is currently evaluating the quality and completeness of the Kosovo Memory Book of the Humanitarian Law Center (HLC) in Belgrade, Serbia. The objective of the Kosovo Memory Book (KMB) is to commemorate every single person who fell victim to armed conflict in Kosovo from 1998 to 2000, either through death or disappearance.

While building and reviewing their database, one of the things that HLC has to do is “record linkage,” a process also known as “matching.” Matching determines whether two records are the same people (“a match”) or different people (“a non-match”). Matching helps to identify whether two existing records refer to the same individual. Such effort allows us to remove duplicates from data and ensures that nobody is counted twice, an important aspect of data quality.

At HRDAG, we teach computers to classify records into matches and non-matches, because matching is not as trivial as it may sound initially. To illustrate the difficulty of this decision process, imagine you have two records of individuals with the same or very similar names. In order to decide whether these two records truly represent a match, you will probably look for (dis)agreement on further fields, such as dates and locations of birth, death or disappearance. To further complicate this issue, keep in mind that information can be erroneously reported (think of typos!), or that in ethnically diverse settings such as Kosovo, names of individuals and locations can be transcribed differently (Albanian vs. Serbian vs. English transcriptions). And did I mention issues of partial, missing or conflictingly reported information across different primary sources?

Obviously, such complexity can make it very tricky for the human brain and eye to perform matching decisions in a consistent and reliable manner—over time and across different staff. To systematically control the matching process, our HRDAG team member Dr. Jeff Klingner developed an automated record linkage software that we already used in previous projects to match records of victims during episodes of violence, in places such as Guatemala, Colombia, or recently Syria.

I am currently using this record linkage software to hunt for duplicates in the HLC database, among other things. Doing so I first had to build a training set. This training set (TS) contains pairs of records that I review by hand. At the moment there are more than 1100 hand-labelled pairs, and counting, in the TS, denoted as either a match or a non-match. This may sound like a lot of manual labour. But actually I am dealing with 32423 input records from eight different data sources on victims in Kosovo to begin with and I do not want to review every possible pairing of that many records, which by the general formula is Nx(N-1)/2, or 525 609 253! Therefore the automation.

Along with the TS, I build record comparators. Record comparators (RC) contain comparison functions that evaluate similarity within record pairs for the different data fields (names, dates, locations, etc.). For example, I am using an RC that compares the similarity of two names based on how many times they need to be edited to make them the same. The smaller this measure of “edit distance,” the more likely two records are a match. Another RC measures the time between two dates of birth or death, with smaller or no time distance indicating a higher likelihood of two records being a match. The RCs return “feature vectors,” (fv) which contain measures of similarity for each record pair in numerical terms, e.g., fv=(edit.distance=0.3, date.distance=0.7). These vectors can be summarized to one final measure of confidence for whether a pair of records is deemed a match or not.

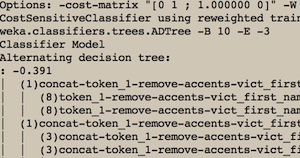

The RCs are run on the training pairs and the similarity information is used to build a decision model, in my case an Alternating Decision Tree (ADTree) from the Weka library for various data mining tasks. The ADTree is a machine learning algorithm that teaches my computer how to classify record pairs as matches or non-matches by exploiting the similarity information given in the RC feature vectors of the human-made example pairs in the TS. Having built the decision model, my computer is trained enough to start working on all record pairs.

At this point in the project I am not yet able to generate all pairs of record combinations for classification, as 32423x(32423-1)/2 candidate pairs knock out my machine—too much computing going on. Therefore, I am currently formulating a set of rejection rules that reject all candidate pairs that are very unlikely to be a match in the first place. For example, two individuals with three or more years difference in age and of opposite sex are very likely a non-match. I do not need to feed such pair to the automated matcher, which allows me to save computing time and power. For now, I am approximating pair generation with a set of simple algorithms that create reasonable candidate pairs, such as creating pairs of any records with the same year of birth, death, etc.

The program uses the RCs to obtain similarity information for each candidate pair and classifies them as matches or non-matches based on the decision model. Records classified as matches are grouped in clusters, or “match groups.” The clustering algorithm is average linkage clustering taken from hierarchical cluster analysis. In a final step, records within a cluster—these are all determined positive matches and, if coming from the same data source, are “duplicates”—are merged into one single record. Again we use some simple algorithms to preserve the most specific information on each field inside a cluster. For example, reconciling slight disagreement between records can be done by preferring a specific data source we have come to trust more, often it means preferring exact to partial information.

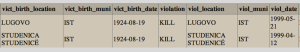

One example of a cluster that represents a duplicate in the HLC database, part 1.(Click for full image.)

While this process is very straightforward, in reality the training or “machine learning” is done in a loop. Whenever the model classifies pairs as matches or non-matches, it issues pairs of records that are of low-confidence, i.e., the program is not sure whether it has classified these pairs correctly. It also issues pairs of records that the model classifies differently from the human matcher. Such requested pairs need to be reviewed by me, labelled, and added to the current TS to update the existing decision model and then rerun the automated matcher on the full set of candidate pairs. Another way to train the model is by breaking apart match groups (the “clusters”) that were wrongly classified (so-called “false positives”) and adding these as example pairs of non-matches such that the classifier learns to better discriminate.

Part 2 of above cluster. Compare both rows and note the slight differences across the various data fields. (Click for full image.)

In sum, automated matching is an iterative process. And while the machine is learning, the human matcher —me—is learning just as well.

In a future post, we will address how to evaluate the performance of the automated matching to decide when the record linkage is accomplished. The main concepts behind HRDAG’s automated matching can be found in this book on data quality and record linkage by Herzog et al. You can find more detail and citations underlying HRDAG’s approach to matching in the corresponding section of our recent Syria report.

[Creative Commons BY-NC-SA, including images]