Report on Measures of Fairness in NYC Risk Assessment Tool

Today HRDAG published a report titled *Measures of Fairness for New York City’s Supervised Release Risk Assessment Tool*, an analysis based on datasets provided by the Criminal Justice Agency (CJA), a nonprofit organization, and the New York State Division of Criminal Justice Services (DCJS). The report responds in part to concerns raised by advocates and researchers about risk assessment models, and asks whether one particular risk assessment model reinforces racial inequalities in the criminal justice system.

In the report, co-authors Kristian Lum and Tarak Shah evaluate a specific risk assessment tool used in New York City. The tool was designed by CJA as a statistical model to predict the likelihood that an arrested person, or “defendant,” will be re-arrested for a felony during the pretrial period, and it was used to screen defendants for acceptance into a pretrial supervised release program. The evaluation draws on several common measures of fairness.

“An important lesson from this process is the need for transparency in the development of these risk assessment tools,” said Tarak Shah, data scientist at HRDAG. “It was only through the process of trying to re-build this model from the original training data that was provided to us that we discovered that the scores used in the tool do not come directly from the model-fitting process.”

Some of the key findings of the report include:

- The training data may itself encode racial bias into the tool, since it comes from the peak years of “Stop, Question, and Frisk,” a policing practice found by courts to be racially discriminatory.

- The point values used by the tool were not derived directly from a fitted model but reflect an ad hoc “compromise” process, highlighting how ethical, political, or other values-based considerations that are not entirely data-based may be embedded in the tool.

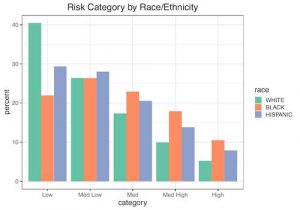

- Based on the risk assessment, Black defendants were about twice as likely as White defendants to be made ineligible for the supervised release program, and Hispanic defendants were about 1.5 times as likely. The supervised release model predicted felony re-arrest with similar accuracy across races, but if adhered to consistently it would make Black and Hispanic defendants ineligible at higher rates than White defendants. Thus, the tool has the potential to disproportionately impact communities of color relative to White communities by denying them access to a potentially beneficial program.

- Ultimately, whether a risk assessment is considered “fair” depends on the definition of fairness used, and different definitions can lead to different conclusions about whom, if anyone, the model treats unfairly.
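
To make the last point concrete, here is a minimal Python sketch, not the report's method, that computes several common group fairness measures from confusion-matrix counts, treating “flagged” as “made ineligible.” The group names `group_a` and `group_b`, the `Confusion` and `fairness_report` helpers, and all counts are hypothetical and invented for illustration; they are not drawn from the CJA or DCJS data. “Accuracy” here means plain classification accuracy, which may not be the accuracy measure the report itself used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Hypothetical confusion-matrix counts for one group (flagged = made ineligible)."""
    tp: int  # flagged high risk, later re-arrested
    fp: int  # flagged high risk, not re-arrested
    fn: int  # not flagged, later re-arrested
    tn: int  # not flagged, not re-arrested

    @property
    def total(self) -> int:
        return self.tp + self.fp + self.fn + self.tn

    def ineligibility_rate(self) -> float:
        # Share of the group that the tool would make ineligible.
        return (self.tp + self.fp) / self.total

    def accuracy(self) -> float:
        # Share of predictions (flagged or not) that matched the outcome.
        return (self.tp + self.tn) / self.total

    def false_positive_rate(self) -> float:
        # Among people NOT re-arrested, the share who were flagged anyway.
        return self.fp / (self.fp + self.tn)

    def positive_predictive_value(self) -> float:
        # Among flagged people, the share who were in fact re-arrested.
        return self.tp / (self.tp + self.fp)


def fairness_report(groups: dict[str, Confusion], reference: str) -> dict[str, dict[str, float]]:
    """Compute per-group metrics, with ineligibility expressed relative to a reference group."""
    ref_rate = groups[reference].ineligibility_rate()
    report = {}
    for name, c in groups.items():
        report[name] = {
            "ineligibility_rate": c.ineligibility_rate(),
            "relative_to_reference": c.ineligibility_rate() / ref_rate,
            "accuracy": c.accuracy(),
            "false_positive_rate": c.false_positive_rate(),
            "ppv": c.positive_predictive_value(),
        }
    return report


# Hypothetical counts, invented for illustration only (not from the report).
groups = {
    "group_a": Confusion(tp=10, fp=10, fn=10, tn=70),
    "group_b": Confusion(tp=30, fp=10, fn=10, tn=50),
}

if __name__ == "__main__":
    for name, metrics in fairness_report(groups, reference="group_a").items():
        print(name, {k: round(v, 3) for k, v in metrics.items()})
```

In this toy setup both groups have identical accuracy (0.8), yet `group_b` is made ineligible at twice the rate of `group_a` and also has a higher false positive rate and a higher positive predictive value. Judged by accuracy alone the tool looks even-handed; judged by ineligibility rates or false positive rates it does not.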

Acknowledgments

The report was supported by The Ethics and Governance of AI Initiative, the Ford Foundation, the MacArthur Foundation, and the Open Society Foundation. Data were provided by CJA and DCJS, although neither the organization nor the agency was involved in writing the report.