Multiple Systems Estimation: Collection, Cleaning and Canonicalization of Data

<< Previous post: MSE: The Basics

Q3. What are the steps in an MSE analysis?

Q5. [In depth] Do you include unnamed or anonymous victims in the matching process?

Q6. What do you mean by “cleaning” and “canonicalization?”

Q7. [In depth] What are some of the challenges of canonicalization?

Q3. What are the steps in an MSE analysis?

The process varies somewhat from case to case. However, every MSE estimate shares several key steps, which will be described in greater detail, below:

Data collection (Q4, Q5)

Cleaning and canonicalization (Q6, Q7)

Matching, also called de-duplication or record linkage (Q8, Q9)

Stratification (Q10, Q11)

Estimation (Q12–Q16)

Q4. What does data collection look like in the human rights context? What kind of data do you collect?

“Data collection” can mean several things in the human rights context. In some cases, such as our work with the Timor-Leste Commission for Reception, Truth and Reconciliation (CAVR, in its Portuguese acronym), we partner with organizations during the initial phases of data collection. Participating in data-gathering from the outset facilitates later analyses. In these projects, we emphasize the use of controlled vocabularies as data are coded. A controlled vocabulary is a pre-selected set of well-defined categories that do not overlap, and that all trained coders can apply consistently and accurately. The accuracy and consistency of coding can be measured by comparing what all the coders do when given the same document. Their level of agreement is called inter-rater (or inter-coder) reliability (IRR), and high IRR is crucial to a high quality data collection project.

While analysis may be simpler when our team advises data collection and coding, this is seldom possible. More frequently, we receive “found data” from partners who have already collected testimonies or lists of violations. (On occasion we also work with literally “found” data; for example, border crossing records in Kosovo or abandoned paperwork in Chad.) When the data source has already been collected, we work with our partners to consider their analytical goals. We have advised several organizations, such as the Colombian Commission of Jurists, on issues of data collection, coding and de-duplication.

Q5. [In depth] Do you include unnamed or anonymous victims in the matching process?

We do not use unnamed or anonymous reports for MSE, but we do use such information for other purposes. We often receive data in which some victims are unnamed. If other information about the victim is available (e.g., sex, location, etc.) then these anonymous records can still be used as the basis of descriptive statistics. However, while it is theoretically possible to match anonymous records when enough other information exists, it is not practically feasible. (For example, if each of two reports refers to an unnamed group of victims—say, one group of five victims and one group of seven—we can never be sure if one victim group is a subset of the other, if they share some but not all victims, or if they refer to completely different events. Therefore, while we occasionally include anonymous victims in descriptive statistics (or even comparisons of datasets), we never include anonymous victims in MSE estimates, because we cannot include them in the matching process.

Q6. What do you mean by “cleaning” and “canonicalization”?

For us, “data cleaning” is a process that applies to a single dataset. It usually means making sure that data are entered and represented correctly–for example, by removing out-of-bounds values, checking for typos afflicting data entry, addressing problems of missing data, or making sure that data values are consistent. (For example, if you’ve coded the variable “sex” as “M” and “F,” you shouldn’t see numbers in that column.)

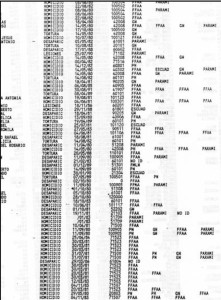

Canonicalization typically happens after cleaning (not always). By “canonicalization” we mean the process of unifying variable names and values, to the extent possible, across several datasets. Canonicalization begins with several datasets whose variable names and values may not match, or which may not be coded at the same level(s) of specificity. After renaming and possibly recoding variables, its final result is a single merged dataset that includes all observations from all component datasets.

Q7. [In depth] What are some of the challenges of canonicalization?

Let’s take the example of three datasets, each of which codes for sex (as in the question above). One dataset’s variable sex has the values “M” and “F,” while a second dataset’s variable SEX uses “Male,” “Female,” and “Unknown,” and a third dataset has a variable called gender that uses 1 for male, 0 for female, 88 for “prefer not to say” and 99 for “unknown.” In this case, the analyst requires a system that incorporates all these possible values under a unified name and coding scheme. Perhaps we recode “M” as 1, “F” as 0, “Male” as 1, “Female” as 0, and “Unknown” as 99, and name the new variable sex.

But is there hidden complexity in this example? What if the dataset with values “M,” “F,” and “U” includes in the “unknown” category some individuals who are marked as “unknown” because they preferred not to say? Post hoc, there is no way to determine whether this is the case, and the most conservative strategy is to remove the “prefer not to say” code and recode these and all “unknown” observations as “unknown or prefer not to say.”

A somewhat analogous situation occurs when the variable(s) at issue describe human rights violations. It is extremely important that each dataset utilize a controlled vocabulary as it codes violations. However, different datasets may use very different vocabularies, such that violation types may not match in a one-to-one way across datasets. If one group uses only the values “torture” and “lethal violence,” but another dataset lists “physical torture,” “psychological torture,” “attempted murder,” “disappearance,” and “homicide,” it is vital that we determine how these violation types are defined, so that the datasets can be accurately compared and, eventually, matched.

While these types of decisions are central to the canonicalization process, there are other important tasks completed at this stage as well, including more complex schema matching, character set encodings, and parsing names, addresses, and locations into sub-parts (first, middle, last, house number, street, neighborhood, city, etc.).

The next post in the series is MSE: The Matching Process >>

[Creative Commons BY-NC-SA, excluding image]